Integration with Gemini solves two main tasks:

1) Automatically populating data fields by analyzing user messages with Gemini. This includes filling custom case and user fields of the "Dropdown" and "Checkbox" types, as well as the standard "Group" field;

2) Sending custom requests to Gemini for analysis — for example, you can forward a user’s message and then use the response from Gemini in your workflow.

We do not charge any additional fees for connecting or using the Gemini integration, but you will need access to the Gemini API. The free tier has fairly strict usage limits: the exact numbers depend on the chosen model, but this option is most likely suitable only for testing or for narrow tasks in small businesses.

In addition, under Google's policy, free-tier requests to the model are processed with lower priority and often return errors such as "The model is overloaded. Please try again later" and/or "You exceeded your current quota…"

If you observe frequent failures:

- consider switching to Gemini 2.0 Flash or Gemini 2.5 Flash-Lite, which are less resource-intensive.

- subscribe to a pay-as-you-go plan for stable operation.

Commercial use, even in small companies, will almost certainly require a pay-as-you-go subscription, under which you pay Google based on usage.

Gemini pricing is tied to the volume of text in the request and response. Billing is calculated in tokens, where each token corresponds to about 4 Latin characters or 1–2 Cyrillic characters, including spaces and punctuation. For example, the word "Gemini" consumes one token. The cost is determined by the total number of tokens used to process the request and generate the response. The final price may also be affected by the thinking mode.
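To get a feel for how text length turns into cost, here is a rough sketch that applies the heuristic above (about 4 Latin characters or 1–2 Cyrillic characters per token). It is only an estimate: the actual token count comes from the Gemini API and is shown in the case history, the 1.5 Cyrillic ratio is an assumed midpoint, and the per-million-token prices are placeholders you would take from Google's pricing page.

```python
import re

# Heuristic from this article: about 4 Latin characters per token and
# 1-2 Cyrillic characters per token (1.5 is used here as an assumed midpoint).
LATIN_CHARS_PER_TOKEN = 4.0
CYRILLIC_CHARS_PER_TOKEN = 1.5
CYRILLIC = re.compile(r"[\u0400-\u04FF]")


def estimate_tokens(text: str) -> int:
    """Rough token estimate; spaces and punctuation count as characters too."""
    if not text:
        return 0
    cyrillic = len(CYRILLIC.findall(text))
    other = len(text) - cyrillic
    estimate = other / LATIN_CHARS_PER_TOKEN + cyrillic / CYRILLIC_CHARS_PER_TOKEN
    return max(1, round(estimate))


def estimate_cost(prompt: str, response: str,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimated cost of one request, pricing input and output tokens separately.

    Prices are placeholders; thinking tokens are not included in this estimate.
    """
    tokens_in = estimate_tokens(prompt)
    tokens_out = estimate_tokens(response)
    return (tokens_in * input_price_per_million
            + tokens_out * output_price_per_million) / 1_000_000


if __name__ == "__main__":
    prompt = "Translate into English: " + "Здравствуйте, у меня вопрос по оплате."
    print(estimate_tokens(prompt), "input tokens (rough estimate)")
```

Because thinking mode adds tokens that this sketch ignores, treat its result as a lower bound for models with thinking enabled.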

The following limits apply when working with the Gemini API:

- Quotas — functional limits that can be changed in the project settings within your Google Cloud account;

- System limits — technical restrictions due to the architecture of Google Cloud. These limits cannot be changed.

You can learn more about the principles of pricing and billing for Gemini API usage here.


Integration setup

Google Cloud Platform (GCP) is Google’s cloud platform, offering a wide range of services for data storage, computing, machine learning, and other tasks. In GCP, resources (virtual machines, databases, AI tools, etc.) are organized into GCP projects, which are isolated logical containers. Projects let you group resources, configure access rights, and manage permissions for various APIs and Google Cloud services.

Working with the Gemini API requires an active GCP project to manage resources and access the necessary tools.

Step 1: Log in to your Google Cloud account. Ensure your Google Cloud account has an active GCP project or create a new one. We show how to do this in the video tutorial:

Step 2: Log in to the Google AI Studio console. Create an API key by following these steps: Get API key → Create API key → Search Google Cloud projects → Create API key in an existing project, then copy the key.

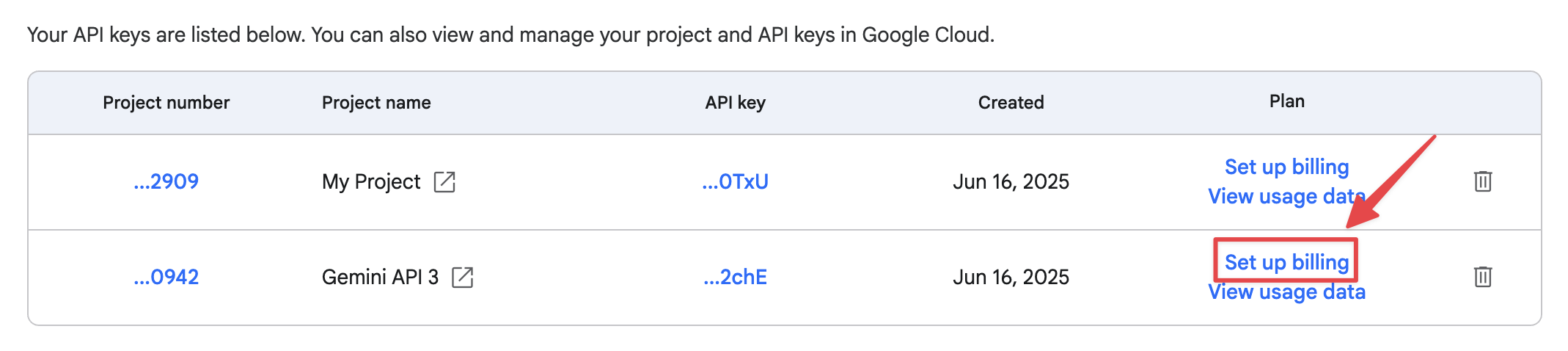

Use the "Set up billing" link in the API key description to link your Google Billing Account to the GCP project where the key was created, thereby activating the pay-as-you-go subscription option for the Gemini API:

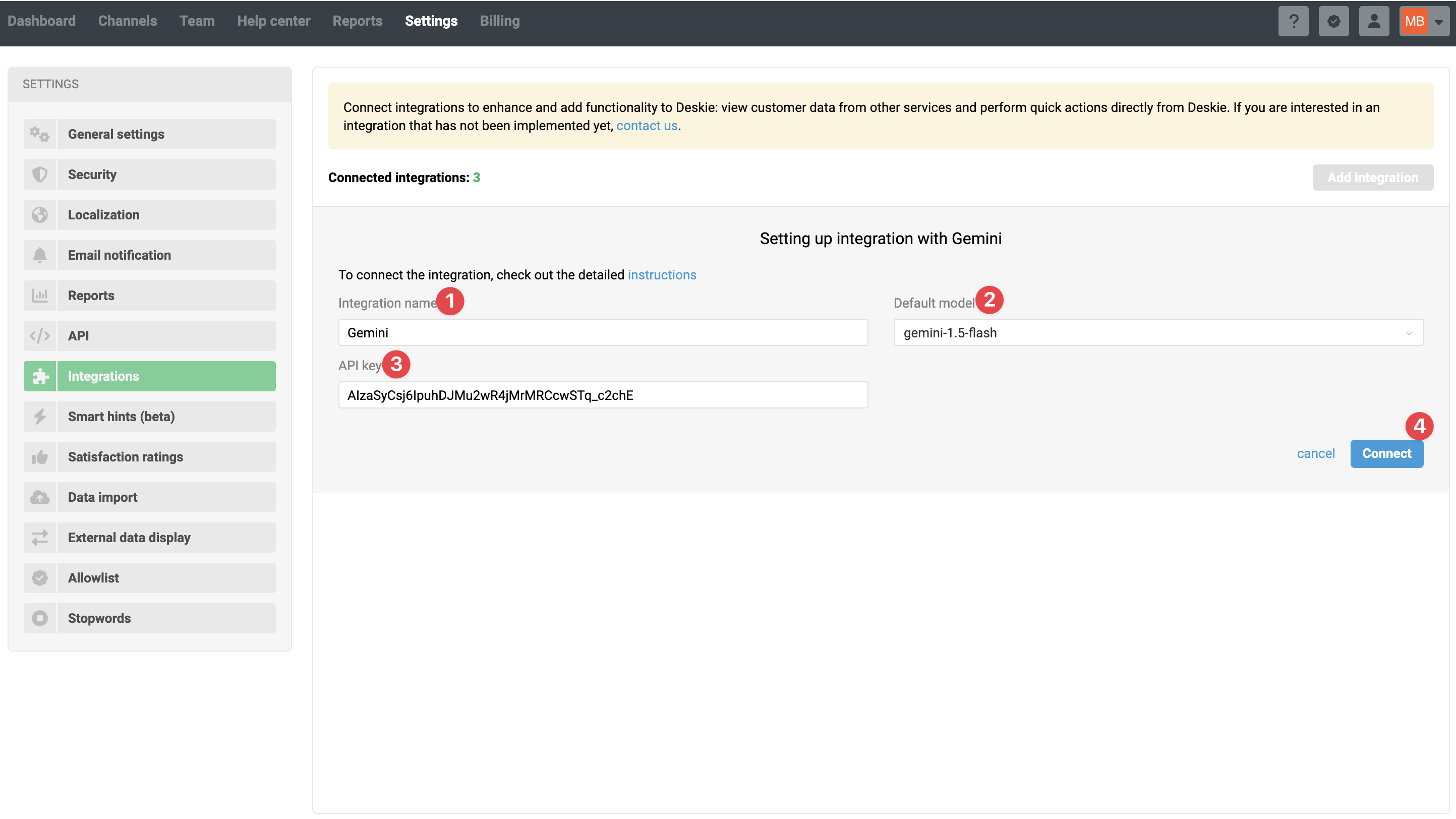

Step 3: In your Deskie admin account, navigate to Settings → Integrations → Gemini. Give your integration a name, select the default model, and enter the API key from the Google AI Studio console that you obtained in Step 2.
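If you want to check the key outside Deskie before entering it, the sketch below builds a single generateContent request to the Gemini REST API using only the Python standard library. The endpoint and the x-goog-api-key header follow Google's public API; the GEMINI_API_KEY environment variable and the chosen model ID are assumptions for this example, and Deskie itself does not require this step.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a generateContent request with the API key in the x-goog-api-key header."""
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def first_text(response: dict) -> str:
    """Extract the text of the first candidate from a generateContent response."""
    return response["candidates"][0]["content"]["parts"][0]["text"]


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # assumption: key stored in this variable
    if key:
        req = build_request(key, "gemini-2.5-flash-lite", "Reply with the word OK.")
        with urllib.request.urlopen(req, timeout=30) as resp:
            print(first_text(json.load(resp)))
```

If the key is valid, the script prints a short reply; quota or overload errors on the free tier surface as an HTTP error raised by urlopen.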

Which language model to choose?

A language model is an algorithm that analyzes text, understands its context, processes it, and generates new text. The more complex the language model, the more effective it is, but it is also billed under a more complex scheme and therefore costs more.

When connecting the integration, you can choose which model will be used for requests by default. The integration supports:

- Gemini 2.0 Flash-Lite

- Gemini 2.0 Flash

- Gemini 2.5 Flash-Lite

- Gemini 2.5 Flash

- Gemini 2.5 Pro

The default language model in the integration settings can be changed at any time.

Starting from September 24, 2025, Google no longer supports Gemini 1.5 Flash, Gemini 1.5 Flash-8B, and Gemini 1.5 Pro models. If you have one of these models selected in your integration settings, we will automatically replace it with Gemini 2.5 Flash-Lite.

You can learn about the model lifecycle in Google's official documentation.

Thinking mode

The Gemini 2.5 Pro and Gemini 2.5 Flash models include an internal tool called thinking mode, which assists in solving complex tasks. However, this mode consumes additional tokens, which increases both the cost and the response time of requests.

For the types of tasks that the Gemini integration handles in Deskie, this tool is not necessary. Therefore, for the rule actions that automatically determine "Group", "Dropdown" or "Checkbox" values, and for "Send text request to AI", we have disabled thinking mode. If a model does not allow thinking mode to be disabled, we set the minimum allowable value to minimize the impact of this parameter on request costs.

When sending a custom request to the AI, you need to consider the model’s parameters and, if necessary, specify the thinkingBudget parameter within the allowed range for that model. For models that allow this parameter to be disabled, we recommend setting "thinkingBudget": 0. For models where this parameter cannot be disabled — for example, Gemini 2.5 Pro — specify the minimum allowable value as indicated in the documentation.
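A minimal sketch of picking the lowest thinking budget per model when you assemble a generationConfig for a custom request. The table is an assumption based on this article: Gemini 2.5 Flash can disable thinking (budget 0), while Gemini 2.5 Pro cannot, so it gets 128, the value this article uses in its example for models with thinking support. Check Google's documentation for the current ranges and add other models as your settings require; models not in the table (for example, the 2.0 models) get no thinkingConfig at all.

```python
from typing import Optional

# Assumed minimum thinking budgets (verify against Google's documentation):
# 0 disables thinking where the model allows it; Gemini 2.5 Pro cannot turn
# thinking off, so the smallest allowed budget is used instead.
MIN_THINKING_BUDGET = {
    "gemini-2.5-flash": 0,
    "gemini-2.5-pro": 128,
}


def generation_config(model: str, max_output_tokens: Optional[int] = None) -> dict:
    """Build a generationConfig with the lowest thinking budget for the model."""
    config: dict = {"responseMimeType": "text/plain"}
    if max_output_tokens is not None:
        config["maxOutputTokens"] = max_output_tokens
    budget = MIN_THINKING_BUDGET.get(model)
    if budget is not None:  # models without an entry get no thinkingConfig
        config["thinkingConfig"] = {"thinkingBudget": budget}
    return config


if __name__ == "__main__":
    print(generation_config("gemini-2.5-pro"))
```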

Using Gemini in rules

After connecting the Gemini integration, new conditions and actions appear in Deskie’s automation rules, allowing you to configure the integration to work according to your specific scenarios.

Before you start configuring, we recommend checking out our article on the overall logic of automation rules in Deskie or watching the quick video guide on rules.

Actions

To avoid duplicate requests and, consequently, unnecessary token usage, each action in the "— AI integration" category can only be included once within a single rule.

1) Automatically determine "Group"

Available in rules for new and updated cases, this action sends a standard request to Gemini to analyze the message. Based on Gemini's response, the most appropriate value for the standard "Group" field is automatically determined and then set in the case's parameters:

- In rules for new cases, the text of the first message in the case is sent for analysis;

- In rules for updated cases, the text of the user’s latest message in the case is sent for analysis.

2) Automatically determine "Dropdown / Checkbox"

Available in rules for new and updated cases, this action sends a standard request to Gemini to analyze the message. Based on Gemini's response, the most appropriate value for a custom case or user field of the types "Dropdown" or "Checkbox" is automatically determined and then set in the case's parameters.

- In rules for new cases, the text of the first message in the case is sent for analysis;

- In rules for updated cases, the text of the user’s latest message in the case is sent for analysis.

Example of how the rule works:

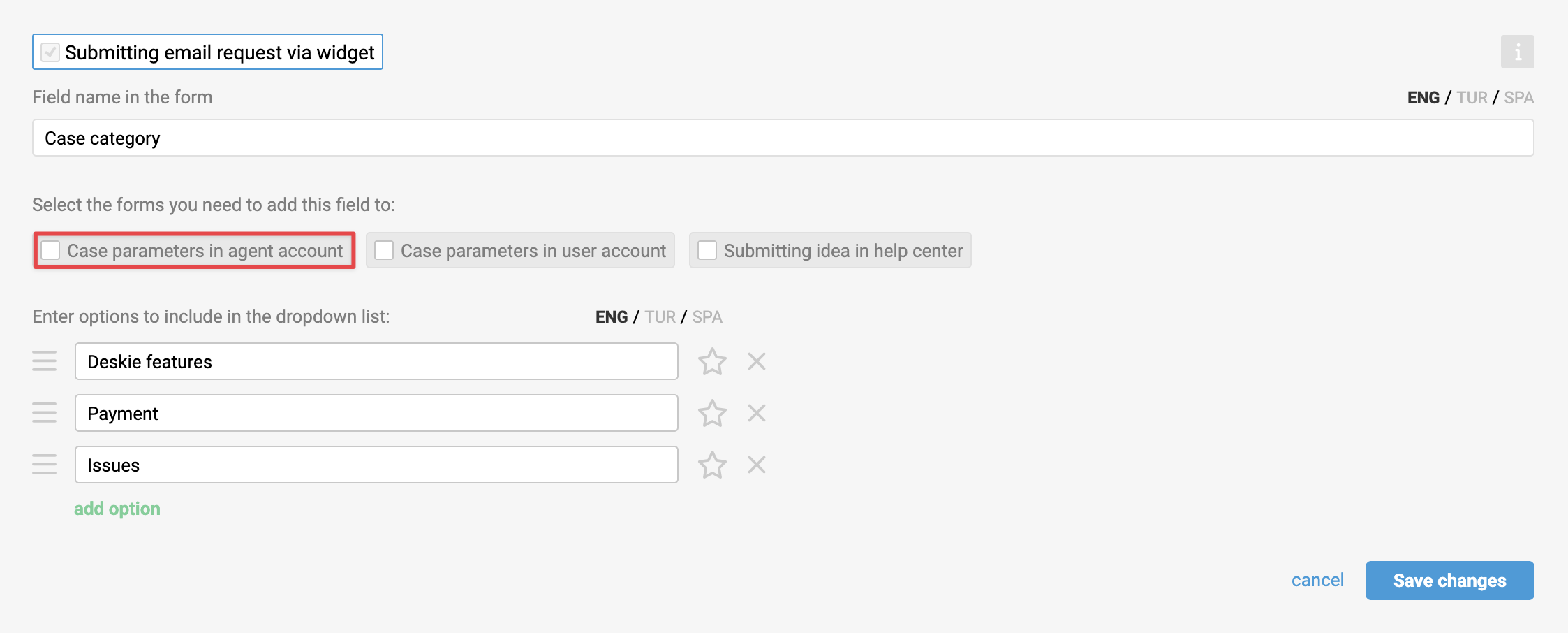

Data field configuration

The data fields you plan to populate using AI should be created in advance and added to the form so they appear on the case page in the agent’s account. To ensure the integration works smoothly, please make sure that:

- field names and values are unique;

- if multilingual support is used, field names and values are provided in all supported languages and are unique;

- field names and values start and end with a non-space character (no leading or trailing spaces).
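A quick way to check these guidelines before enabling AI actions is a small script over a list of your fields. The sketch assumes a hypothetical {field name: [values]} dictionary per language (Deskie does not necessarily export fields in this shape) and checks uniqueness of names and of values within each field, plus leading or trailing spaces.

```python
from typing import Dict, List


def check_field_config(fields: Dict[str, List[str]]) -> List[str]:
    """Return a list of problems for a hypothetical {field name: [values]} mapping.

    For multilingual setups, run it once per language.
    """
    problems: List[str] = []
    seen_names = set()
    for name, values in fields.items():
        clean_name = name.strip()
        if name != clean_name:
            problems.append(f"field name {name!r} starts or ends with a space")
        if clean_name in seen_names:
            problems.append(f"duplicate field name {clean_name!r}")
        seen_names.add(clean_name)
        seen_values = set()
        for value in values:
            clean_value = value.strip()
            if value != clean_value:
                problems.append(f"{clean_name}: value {value!r} starts or ends with a space")
            if clean_value in seen_values:
                problems.append(f"{clean_name}: duplicate value {clean_value!r}")
            seen_values.add(clean_value)
    return problems


if __name__ == "__main__":
    for problem in check_field_config({"Case category": ["Billing", "Billing "]}):
        print(problem)
```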

Possible issues

Not following the data field configuration guidelines increases the chances of encountering the following issues:

a) AI returns an invalid value

If the AI returns a value for a "Dropdown" field that doesn’t exist in Deskie, or if a "Checkbox" field’s value isn’t one of the expected numeric states ("1" or "0"), the field won’t be populated. Instead, this action will be logged in the activity history as:

Automatically determine "[Field_name]": The AI response value doesn’t match any of the allowed values for this field (tokens used — [n])

b) AI returns an empty value

If the AI skips a particular field and does not return a value for it, the field won’t be populated in Deskie. Instead, this will be logged in the activity history as:

Automatically determine "[Field_Name]": AI did not determine a value due to insufficient data (tokens used — [n])

c) The field is not activated for the "Case parameters in agent account" form

If a field isn’t set up to show in case parameters on the agent account page, you won’t see any records of the AI automatically populating or updating that field in the activity history. That said, the action still takes place behind the scenes. So, if you enable the field to display on the case page later, it will be populated with the AI’s value, and the corresponding entry will then appear in the activity history.
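Issues a) and b) above boil down to a simple check of the AI answer against the field's allowed values. This is a hypothetical sketch of such a check, not Deskie's actual code: it trims surrounding whitespace, accepts only "1" or "0" for checkboxes and only existing values for dropdowns, and otherwise reports the answer as empty or invalid so the field stays unchanged.

```python
from typing import Iterable, Optional, Tuple

CHECKBOX_STATES = {"1", "0"}  # the numeric states expected for "Checkbox" fields


def validate_ai_value(field_type: str, ai_value: Optional[str],
                      allowed: Iterable[str] = ()) -> Tuple[str, Optional[str]]:
    """Classify an AI answer for one field.

    Returns ("ok", value), ("empty", None) or ("invalid", None); the last two
    correspond to issues b) and a), where the field is left unchanged.
    """
    if ai_value is None or not ai_value.strip():
        return "empty", None
    value = ai_value.strip()
    if field_type == "checkbox":
        valid = CHECKBOX_STATES
    elif field_type == "dropdown":
        valid = set(allowed)
    else:
        raise ValueError(f"unsupported field type: {field_type}")
    return ("ok", value) if value in valid else ("invalid", None)


if __name__ == "__main__":
    print(validate_ai_value("dropdown", "Billing", ["Billing", "Delivery"]))
```

In this sketch, a configured dropdown value with a trailing space ("Billing ") can never match the trimmed answer, which is one reason to follow the field configuration guidelines.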

3) Send text request to AI

Available in all rule types, this action sends Gemini the text request you write, together with the content of the user’s or agent's message to be analyzed. You can specify which message to include using variables:

- [case_description] – first message in a case;

- [last_message] – last message in a case;

- [note_text] – last note in a case.

Deskie stores the response received from Gemini in the [ai_response_by_text_request] variable only for the duration of the rule execution. Within the rule, you can use this variable to:

- send the AI response to the user;

- add the AI response to a note;

- record the AI response in a case field of the type "text field" or "text area".

Example of a text request:

Translate into English: [case_description]

Example of how the rule works:

4) Send custom request to AI

Available in all rule types, this action sends Gemini your custom JSON-formatted request along with the content of the user’s or agent's message to be analyzed. You can specify the message you want using variables:

- [case_description] – first message in a case;

- [last_message] – last message in a case;

- [note_text] – last note in a case.

To send only part of a message in the request, you can specify a character limit using a vertical bar, for example: [case_description|limit1000] or [last_message|limit1000].
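How the variables and the |limit modifier resolve can be illustrated with a short sketch. It assumes that |limitN keeps the first N characters of the message and that unknown variables are left as-is; the function name render_request and the sample texts are hypothetical, and Deskie's own substitution may behave differently in edge cases.

```python
import re
from typing import Dict

# [name] or [name|limitN], e.g. [last_message|limit1000]
VARIABLE = re.compile(r"\[([a-z_]+)(?:\|limit(\d+))?\]")


def render_request(template: str, variables: Dict[str, str]) -> str:
    """Substitute known variables; with |limitN keep only the first N characters.

    Unknown variables are left untouched (an assumption of this sketch).
    """
    def substitute(match) -> str:
        name, limit = match.group(1), match.group(2)
        if name not in variables:
            return match.group(0)
        text = variables[name]
        return text[: int(limit)] if limit else text

    return VARIABLE.sub(substitute, template)


if __name__ == "__main__":
    values = {"case_description": "Bonjour, ma commande n'est pas arrivée.",
              "last_message": "Merci !"}
    print(render_request("Translate into English: [case_description|limit1000]", values))
```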

Deskie stores the response received from Gemini in the [ai_response_by_custom_request] variable only for the duration of the rule execution. Within the rule, you can use this variable to:

- send the AI response to the user;

- add the AI response to a note;

- record the AI response in a case field of the type "text field" or "text area".

In custom requests, you can use the following additional parameters to control the response generation from Gemini:

- responseMimeType: the content type that Gemini returns in response to a request. Since the integration processes text, specify the value "text/plain" to avoid receiving responses in an unwanted format, such as JSON;

- maxOutputTokens: the maximum length of the response in tokens. Specifying this parameter helps control the length of generated responses and thereby optimize costs;

- temperature: a parameter with a range of values from 0.1 to 2 that determines the degree of randomness when generating a response. Its effect is especially noticeable when using topP and topK, as together these parameters influence the diversity and creativity of the responses. Low values (closer to 0.1) make responses more deterministic and precise, which suits tasks requiring strict logic or accurate data. High values (closer to 2) add more randomness and creativity, making responses more diverse and imaginative;

- topP (nucleus sampling): limits the selection of response variations to those whose cumulative probability falls within the specified share (P). For example, at topP = 0.9, only the options that together cover 90% of the probability are considered. This balances randomness and focus by excluding unlikely but still possible options;

- topK: limits the selection of response variations to the K most probable options. For example, at topK = 50, the choice is made only among the 50 options with the highest probability. This helps focus on the most relevant options and exclude the rest. Both parameters control the quality and diversity of generation: topP makes the selection more flexible by focusing on cumulative probability, while topK limits it more strictly to a specific number of options;

- thinking mode: a "reflection" tool that helps solve complex tasks. If the model selected in your settings supports this tool, specify the thinkingBudget parameter within the model's allowed range when sending a custom request to the AI. Learn more

Read more about additional parameters, their scope, and ways to use them here.
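To make temperature, topK and topP more concrete, here is a textbook sampling routine over a handful of candidate tokens. It illustrates the concepts only and is not Gemini's implementation: it applies temperature first, then top-k, then top-p, which is one common ordering; real models may order or combine these steps differently.

```python
import math
import random
from typing import Dict, List, Optional, Tuple


def sample_next_token(scores: Dict[str, float], temperature: float = 1.0,
                      top_k: Optional[int] = None, top_p: Optional[float] = None,
                      rng: Optional[random.Random] = None) -> str:
    """Pick one candidate using temperature, top-k and top-p (nucleus) sampling.

    `scores` are unnormalised log-probabilities (logits) for each candidate.
    """
    if temperature <= 0:
        raise ValueError("this sketch requires temperature > 0")
    rng = rng or random.Random()
    # Temperature: divide the scores; lower values sharpen the distribution.
    scaled = {tok: s / temperature for tok, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked: List[Tuple[float, str]] = sorted(
        ((w / total, tok) for tok, w in weights.items()), reverse=True)
    # top-k: keep only the K most probable candidates.
    if top_k is not None:
        ranked = ranked[:top_k]
    # top-p: keep the shortest prefix whose cumulative probability reaches P.
    if top_p is not None:
        kept: List[Tuple[float, str]] = []
        cumulative = 0.0
        for prob, tok in ranked:
            kept.append((prob, tok))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept
    # Draw from what is left; random.choices renormalises the weights itself.
    return rng.choices([tok for _, tok in ranked],
                       weights=[prob for prob, _ in ranked], k=1)[0]
```

For example, with scores {"yes": 2.0, "no": 1.0, "maybe": 0.1}, top_k=1 always returns "yes", while raising the temperature makes "no" and "maybe" appear more often.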

Example of a custom request text for models without thinking mode support:

    {
      "contents": [
        {
          "role": "user",
          "parts": [
            {
              "text": "You act as a text analyst. Identify two key phrases in: [last_message]. Return only the phrases themselves in your response and do not comment in any way."
            }
          ]
        }
      ],
      "generationConfig": {
        "responseMimeType": "text/plain",
        "maxOutputTokens": 100
      }
    }

Example of a custom request text for models with thinking mode support:

    {
      "contents": [
        {
          "role": "user",
          "parts": [
            {
              "text": "You act as a text analyst. Identify two key phrases in: [last_message]. Return only the phrases themselves in your response and do not comment on them in any way."
            }
          ]
        }
      ],
      "generationConfig": {
        "thinkingConfig": {
          "thinkingBudget": 128
        },
        "responseMimeType": "text/plain"
      }
    }
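Hand-written JSON is easy to break with a missing comma or brace. As an optional helper, this sketch generates a request body with the same structure as the examples above, so the output can be pasted into the "Send custom request to AI" action. The function name custom_request and its parameters are hypothetical; Deskie variables such as [last_message] stay in the text as literal placeholders for Deskie to substitute.

```python
import json
from typing import Optional


def custom_request(prompt: str, thinking_budget: Optional[int] = None,
                   max_output_tokens: Optional[int] = None) -> str:
    """Return the JSON text of a custom request body.

    Deskie variables such as [last_message] stay in the prompt as literal text.
    """
    generation_config: dict = {"responseMimeType": "text/plain"}
    if max_output_tokens is not None:
        generation_config["maxOutputTokens"] = max_output_tokens
    if thinking_budget is not None:
        generation_config["thinkingConfig"] = {"thinkingBudget": thinking_budget}
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": generation_config,
    }
    return json.dumps(body, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    print(custom_request(
        "You act as a text analyst. Identify two key phrases in: [last_message]. "
        "Return only the phrases themselves in your response and do not comment "
        "on them in any way.",
        thinking_budget=128,
    ))
```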

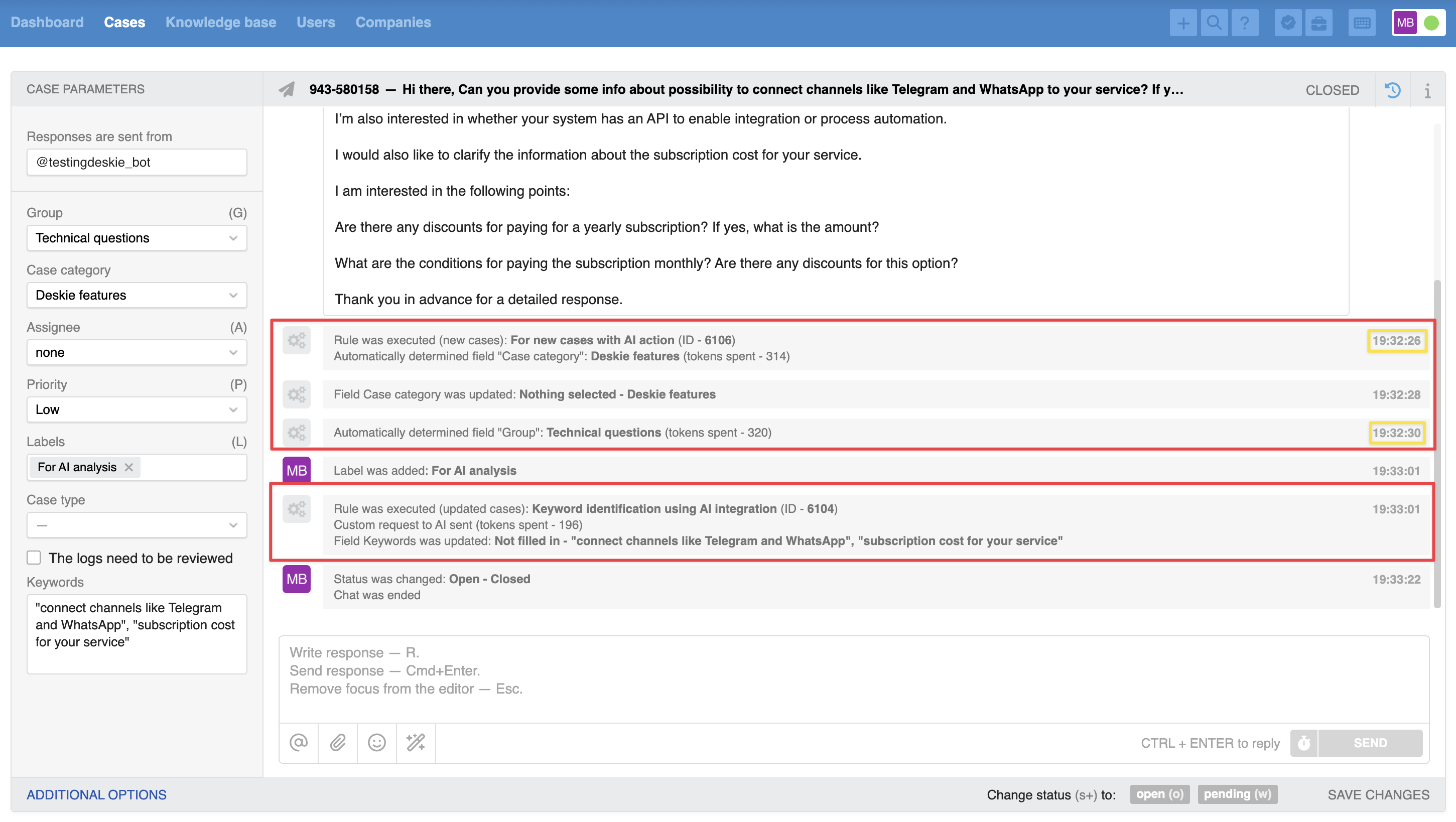

Example of how the rule works:

Technically, the text value of any variable available in the rule settings can be passed to the AI service endpoint.

However, the integration has been optimized specifically for the variables [case_description] and [last_message].

Using other variables may lead to:

- irrelevant AI responses;

- errors due to exceeding the context window limit;

- other unpredictable results.

If you need more complex custom logic, with full case context and additional inputs, we recommend handling such tasks via the OpenAI Assistant. Learn more

Conditions

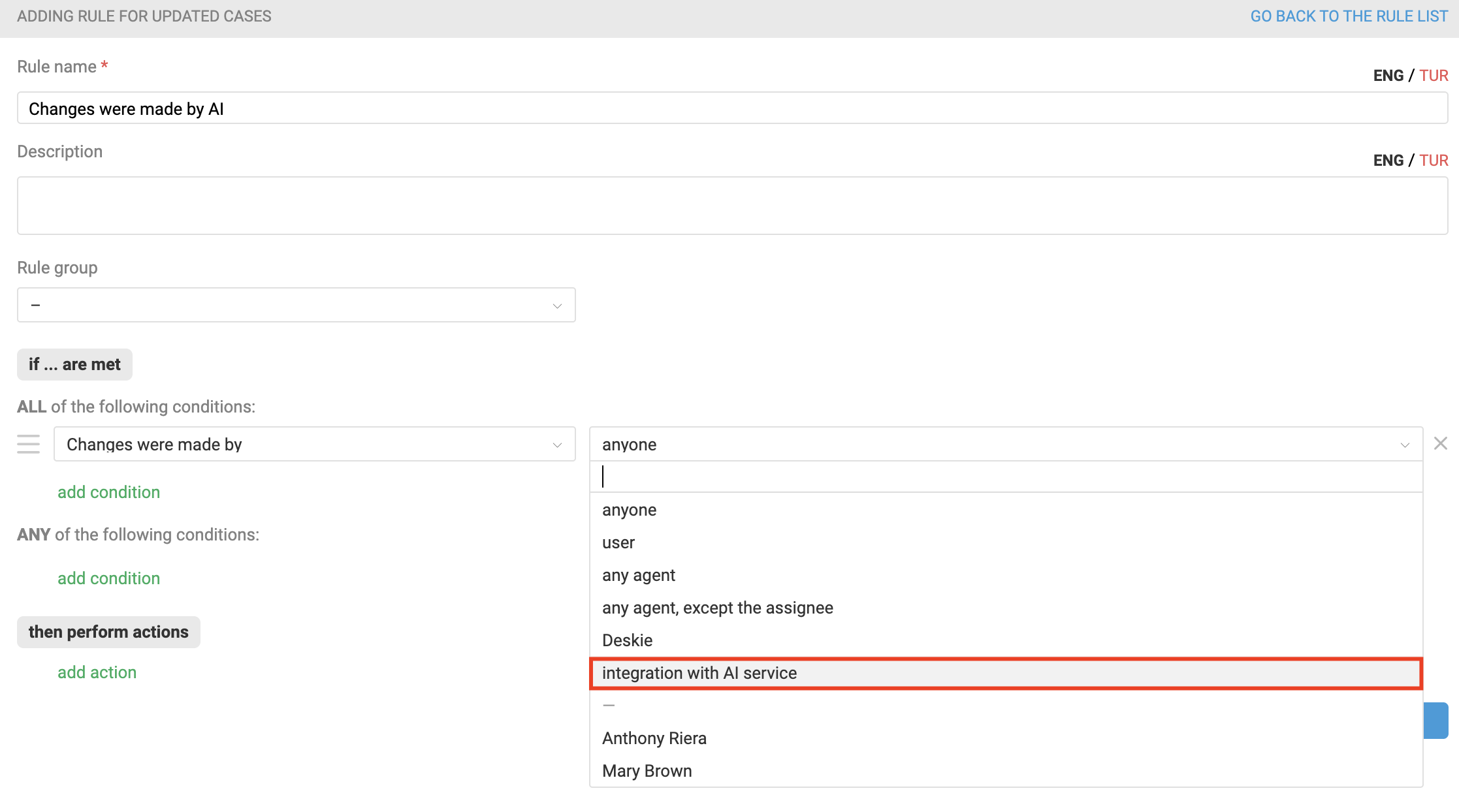

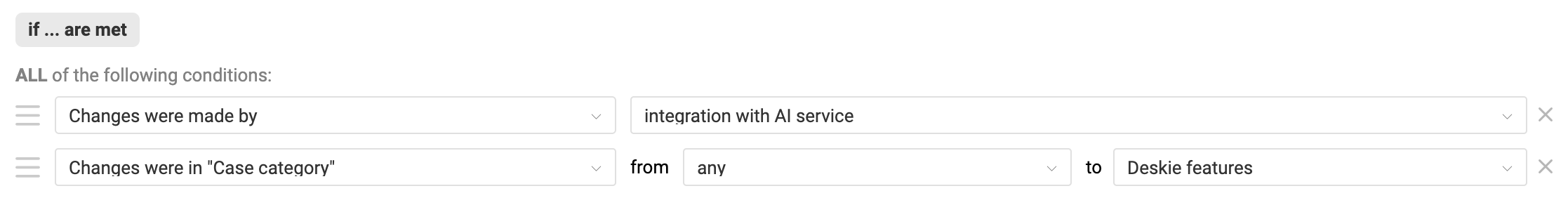

1) Changes were made by — integration with AI service

Available in the rules for updated cases as an option under the condition "Changes were made by". This condition lets you track changes in case fields that happened as a result of actions from the "– integration with AI" category, which are available in the rules for new cases.

a) If a rule includes the condition "Changes were made by – integration with AI service" together with a condition that tracks a change in the value of one of the data fields, these conditions are joined by a logical "AND" operator: the rule is triggered only if the AI service made the specified change.

If the field change was made by an agent, user, or another rule without AI involvement, the rule will NOT be triggered.

Let’s clarify with a concrete example. You have a rule for updated cases that is set to be triggered when the value of a custom field "Case category" changes, but only if AI made those changes.

You also have two rules for new cases that modify the "Case category" field. However, one of these rules does this by sending a request to Gemini, which responds with the appropriate value for this field, while the other changes the field value using the standard action "Change [custom field name]".

In the first scenario, your rule for updated cases will be triggered, but in the second scenario, it won't.

b) To avoid looping, the condition "Changes were made by — integration with AI service" cannot be used simultaneously with an action from the category "— integration with AI". It is also mandatory to select one of the conditions from the category "— changes in case" so that the rule tracks specific changes rather than any change in the case.

c) When the condition "Changes were made by – anyone" is selected, it is not possible to choose AI actions.
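The AND logic from item a) and its "Case category" example fits in a few lines. In this hypothetical sketch, each field change carries the source that made it, and the rule fires only when both conditions hold: the tracked field changed and the source is the AI service. The data model is illustrative, not Deskie's internal representation.

```python
from dataclasses import dataclass


@dataclass
class FieldChange:
    """One change to a case field (hypothetical data model)."""
    field: str
    made_by: str  # e.g. "ai", "agent", "user" or "rule"


def rule_triggers(change: FieldChange, tracked_field: str) -> bool:
    """Both conditions must hold (logical AND): the tracked field changed
    and the change was made by the AI integration."""
    return change.field == tracked_field and change.made_by == "ai"


if __name__ == "__main__":
    # Scenario 1: a new-case rule asked Gemini, and the AI set the category.
    print(rule_triggers(FieldChange("Case category", "ai"), "Case category"))    # True
    # Scenario 2: another rule set the category with a standard action.
    print(rule_triggers(FieldChange("Case category", "rule"), "Case category"))  # False
```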

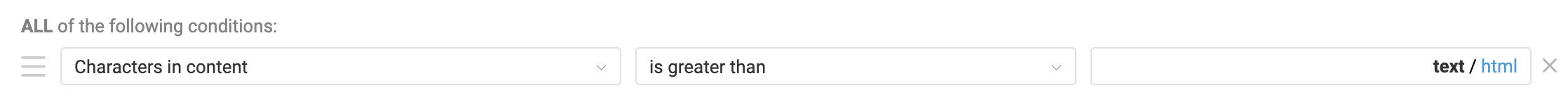

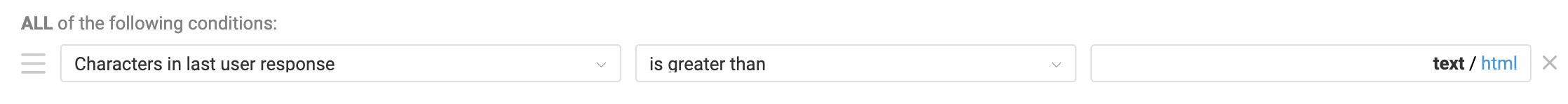

2) Characters in content / last user response

Available in all rule types, this condition lets you check the size of the user's message. It helps you exclude situations where rules that send requests to Gemini should NOT be triggered. For example, there is no point in sending Gemini a chat message that clearly contains nothing useful ("Hello", "I have a question", etc.).

You can choose to count characters either in the text only or including HTML tags.

In rules for new cases, the condition checks the size of the user's first message, so it is called "Characters in content — is greater than / less than / equal to":

In rules for updated and existing cases, the condition checks the user's last message, so it is called "Characters in last user response — is greater than / less than / equal to":

To reduce your costs and help prevent errors on the AI's side, when substituting the [case_description] and [last_message] variables into a Gemini request, we always pass clean text without HTML tags.
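The difference between the two counting modes, and the tag stripping described above, can be sketched with Python's built-in html.parser. This is an illustration of the idea, not Deskie's implementation; details such as how whitespace between tags or HTML entities are counted may differ.

```python
from html.parser import HTMLParser
from typing import List


class _TextExtractor(HTMLParser):
    """Collects the text content of an HTML fragment, dropping the tags."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)  # turns &amp; etc. into characters
        self.parts: List[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def strip_html(html: str) -> str:
    """Return the plain text of an HTML fragment."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    return "".join(parser.parts)


def count_characters(message: str, include_tags: bool = False) -> int:
    """Characters in the message, either as plain text or with HTML markup."""
    return len(message) if include_tags else len(strip_html(message))


if __name__ == "__main__":
    msg = "<p>Hello</p>"
    print(count_characters(msg), count_characters(msg, include_tags=True))  # 5 12
```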

Features of change log recording in a case

The process of fully executing an automation rule that includes actions from the "— integration with AI" category takes time. The duration depends on several factors:

- the number of actions in the rule;

- the volume of data being transmitted;

- the response speed of third-party services;

- the number of cases in which the rule is running simultaneously.

All operations (sending requests to Gemini, receiving its responses, and making changes to the corresponding cases) are performed in the background. Therefore, AI actions are also recorded in the case history in stages, as the actions themselves are performed.

In the case history records of AI actions, we display the status of the request while it is in progress, and after successful completion, we show the number of tokens spent.

Disabling and deleting the integration

If there is more than one active AI integration in the account, when disabling or deleting an integration, the administrator will be prompted to choose:

a) replace the disabled AI integration in the rules with one of the remaining integrations:

Don’t forget to update the AI request text in your rules: when you replace a disabled integration (for example, "Gemini") with another one (for example, "OpenAI"), the request body in the "Send custom request to AI" action is cleared.

b) delete all rule actions related to the AI integration being disabled:

Tell us how you use the AI integration in your work. If it might be helpful to others, we will definitely write about it :)